Not, I hasten to add, a passing Native American, but the server software that powers a good deal of the interweb. Well, the new site has been up for a couple of weeks with no major problems detected. I have, however, had some trouble trying to implement some of the Google PageSpeed Insights suggestions to improve the site’s speed and efficiency. This is mainly aimed at getting the site into a suitable state to use Google AdSense. As you can see, I have put ads on the site, and the main hope is that these will generate enough income to pay for the hosting. I don’t anticipate much in the way of posh cars or exotic holidays!

Cache Control

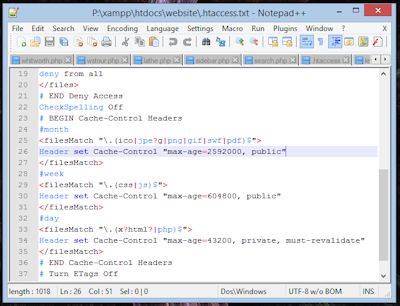

One of the things suggested is to “Leverage Caching”; what they mean is turn caching on. A bit of reading explains that pages cached locally by your browser make for quicker loading times. Unless told otherwise, a browser like Firefox or Chrome will tend to download the page and its content fresh every time you want to view it. If you set a few directives in your Apache .htaccess file you can tell browsers to save things locally and use them on subsequent visits to the page. There are a couple of different Apache modules for this. One is Mod_Expires, which basically tells the browser how long to keep a file before downloading a fresh copy. The other is Mod_Headers, which does a similar job but with more options. I am a complete novice in this area and had to read a lot before I got a rough idea of what to do. I think I have things set up with some fairly short-term cache directives at the moment, until I have finished playing with the site.
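To give a flavour of what those directives look like, here is a minimal sketch of a short-term caching setup. It is an illustration, not a copy of my live file: the file types and the one-hour and one-day lifetimes are assumptions picked for the example, and in practice you would normally choose either the Mod_Expires block or the Mod_Headers block rather than both.

```apache
# Sketch only: file types and lifetimes are illustrative assumptions.

# Option 1: Mod_Expires sets an expiry time per content type.
<IfModule mod_expires.c>
    ExpiresActive On
    ExpiresDefault "access plus 1 hour"
    ExpiresByType image/png "access plus 1 day"
    ExpiresByType image/jpeg "access plus 1 day"
    ExpiresByType text/css "access plus 1 day"
    ExpiresByType application/javascript "access plus 1 day"
</IfModule>

# Option 2: Mod_Headers sets the Cache-Control header directly
# (86400 seconds = 1 day) for files matching the extensions below.
<IfModule mod_headers.c>
    <FilesMatch "\.(png|jpe?g|gif|css|js)$">
        Header set Cache-Control "max-age=86400, public"
    </FilesMatch>
</IfModule>
```

The `<IfModule>` wrappers mean that if the host has not enabled a module, the block is quietly skipped rather than breaking the site with a server error.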

Whilst setting directives with the .htaccess file is OK for static files (images, CSS, script files and the like), it will not work for the PHP files, which are of course generated dynamically. To affect caching for these files I discovered that you need to put a header directive at the top of each file that looks something like `<?php header('Cache-Control: max-age=604800'); ?>`. It has to come before the page outputs anything at all, so in practice it is the very first line of the file. This tells the server to send HTTP headers that allow caching for up to 7 days (604800 seconds). In case you were wondering, HTTP headers are nothing to do with the page that appears in your browser window; they are part of the interchange that goes on behind the scenes between your browser and the server (something else I learnt).

Compression

Having got caching sorted, the next thing Google suggested was compressing pages using GZIP. Apparently all modern browsers are set up to ask for compressed pages; it’s in those HTTP headers. The browser asks the server to send a page and says, oh, if you have it Gzipped I am quite happy to accept that, thank you. Apache dutifully replies and, if it can, squeezes the page before it goes, thus reducing the amount of data flying over the interweb. By this time I am an expert on rewriting the .htaccess file and duly add some Mod_Deflate instructions, which are the standard way of telling Apache to GZIP what it outputs. A quick test and… nothing, and definitely not ZIP. More reading, and it transpires that my hosting company, 1 and 1, do not enable Mod_Deflate on their servers. Scratch head and send e-mail to Tech Support, who reply quickly and apologetically, saying I can use Zlib. Lots more reading.
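For reference, the usual sort of Mod_Deflate instruction looks roughly like the sketch below. The list of content types is an assumption chosen for illustration, not necessarily what I had in my own file.

```apache
# Sketch only: the content types listed are illustrative assumptions.
<IfModule mod_deflate.c>
    # Compress text-based responses before they are sent to the browser.
    AddOutputFilterByType DEFLATE text/html text/plain text/css application/javascript
</IfModule>
```

Because the block is wrapped in `<IfModule mod_deflate.c>`, a server without Mod_Deflate simply ignores it, which is one way to end up with a configuration that looks right and does nothing at all.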

Zlib is part of PHP and has, in my view, very poor documentation. Eventually I found out how to enable it using a php.ini file and switched it on. A quick check with Firefox Element Inspector showed that it was working. A more detailed look showed that it was working but the caching headers seemed to have switched themselves off. Now I am confused (it doesn’t take much). Looking at Google PageSpeed Insights also showed I was getting 404 (page not found) errors; now I am really confused. Turn compression (Zlib) off and everything is working again.

I did a few tests just to make sure I wasn’t seeing things, but with Zlib on, every page except the home page was still being served OK but with a 404 response and the wrong HTTP headers. I turned Zlib off and e-mailed Tech Support again; this time they seem to have headed for the hills!

After a couple of days’ reading, most of which was way over my head, I found one comment in the PHP documentation suggesting there was some vague bug: if you enabled Zlib with its standard `zlib.output_compression = on` it could corrupt headers, but if you enabled it with a buffer size, `zlib.output_compression = 4096`, it would work. I tried this without much hope, but to my surprise it seems to have worked. I now have HTTP headers with cache control set, Gzipped output for PHP and no 404 errors. Result! I still need to sort out compressing CSS and JS, but these files are already minified so they are not going to get much smaller.

Security

Whilst fighting Apache’s .htaccess I thought it would be a good idea to add some of the WordPress recommended security fixes. This meant playing with Mod_Rewrite. Now, I have used this before and never understood it. As far as I can see, Mod_Rewrite uses a language that is written entirely in punctuation marks and makes no sense whatsoever. I therefore resort to the time-honoured method of finding something similar on the interweb and tweaking it until it works or explodes completely. This isn’t the best approach, as Mod_Rewrite is very powerful and a slight error could have a myriad of unseen consequences. At this moment in time I seem, fortuitously, to have hit the right buttons. There are many articles regarding WordPress security so I won’t go into detail, save suggesting:

An article in Smashing Magazine and the WordPress Codex.
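To show what all that punctuation looks like in practice, here is a minimal sketch modelled on the kind of rule the WordPress hardening guidance describes for blocking direct access to include-only files. It is an illustration rather than a copy of my own .htaccess, and it assumes WordPress is installed in the site root.

```apache
# Sketch only: assumes WordPress lives in the site root.
<IfModule mod_rewrite.c>
    RewriteEngine On
    RewriteBase /
    # Refuse (403 Forbidden) direct requests for admin include files.
    RewriteRule ^wp-admin/includes/ - [F,L]
    # Refuse direct requests for PHP files sitting directly in wp-includes.
    RewriteRule ^wp-includes/[^/]+\.php$ - [F,L]
</IfModule>
```

The `-` means "don’t rewrite the URL", `F` returns a forbidden response and `L` stops processing further rules. Rules like these are best kept outside the `# BEGIN WordPress` and `# END WordPress` markers, since WordPress can overwrite anything between them.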

There is still a slight problem with Google having some spurious links recorded, but I think these came about when I was in the process of changing the domain name and had three separate domain names all pointing at the same site. Not a good idea; hopefully the duff links will drop off soon. I have no doubt that there are still some gremlins lurking in the works somewhere, but they will eventually be tamed, as per the Apache.